Simulation’s Role in ADAS/AD System Development

Simulation comes with a purpose. It aims to reproduce the appearance, behavior and physical properties of real-world scenes and scenarios (environment simulation), the functionality of perception and control devices, or the properties of complex systems such as vehicle dynamics (system simulation). Here, we understand it as a means to accelerate and improve the development of real-world systems that are designed to interact with real-world environments or to act as a “broker” between a human and this human’s real-world environment.

Our primary focus – for now – will be the simulation of environments in the context of mobility solutions. The majority of these will be ground-based and road- or rail-bound. We are currently not looking into airborne or maritime solutions, although we are well aware that many of the tools assessed by our initiative may also be used for these applications.

Systems for the Automotive Sector

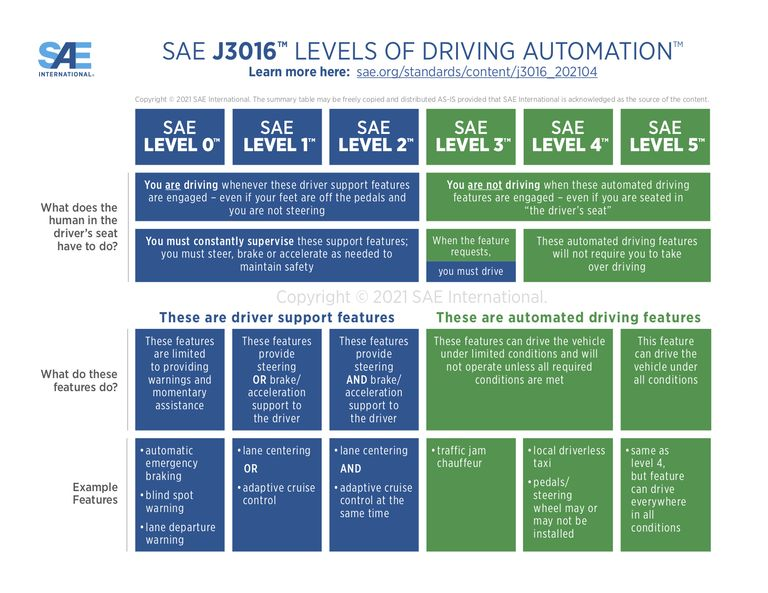

In the automotive sector, one of the key drivers of the focus on environment simulation in recent years has been the emergence of active safety systems, Advanced Driver Assistance Systems (ADAS) and Automated Driving (AD) functions. If you look at the levels of automated driving defined in SAE J3016, you will see that the most versatile (but also error-prone) controller – the human – is gradually being replaced by “the machine”.

SAE lists six levels of automation. From level 0 (no automation) to level 2 (partial automation), the human still plays an active role and is, technically speaking, in control of the vehicle. From level 3 (conditional automation) to level 5 (full automation), the human acts as a mere fallback or is no longer needed at all (i.e. becomes a passenger).

By transforming the human’s role from driver to passenger, the system under development evolves from a mere assistant to “the one who’s in charge”. This has all kinds of implications. The most prominent are the impact on safety requirements (for vehicle passengers and for everyone outside) and a drastically increased number of situations that need to be handled. An AD system can hardly count on the passenger to take over control manually. An ADAS function, by contrast, may at any time rely on the driver being available at 2-3 seconds’ notice with full situational awareness.

It is in these situations that you must make sure your AD system works safely and without flaws. Because it must also cover the standard operating envelope, the machine has to fulfill an unprecedented set of tasks in innumerable variants of situations. The higher the level of automation, the more systems based on Machine Learning (ML) tend to be involved (compared to the “classic” algorithm-driven systems with clear decision and execution trees). ML-based systems are, by some measure, “unknowns” trained for handling potentially “unknown” situations (something humans are really good at). You therefore have to make sure that they will always react in a safe manner. This is achieved by training, training, training – followed by testing, testing, testing.

Training the Learning Machine

But how do you train an ML-based system? You can’t do it the way humans are trained. A learner driver is exposed to real traffic after a short span under supervised conditions (driving school), in the hope that they learn from experience without doing any worse than the typical human novice. A machine treated this way would almost certainly do much worse. Instead, you train it by providing it with loads of relevant situations and teaching it the right actions.

The situations for training need to be created up front. Loads of real-world footage help with this task, but they still won’t cover all the relevant cases. Just think of the chances of having a close – not necessarily fatal – encounter with a pedestrian and being able to record all relevant data at that time:

- the vehicle (driving parameters, sensors etc.)
- the other participants (pedestrian, other vehicles, traffic lights etc.)
- the environment (buildings, light conditions, weather)

Even if you happen to do this once, how do you make sure that your system can be trained to master the same situation under even slightly different conditions (weather, speed etc.)?

This is where environment simulation comes into play.
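In simulation, mastering the same situation under slightly different conditions becomes a matter of sweeping scenario parameters. The following is a minimal Python sketch. It assumes a hypothetical pedestrian scenario described by four parameters. The class and parameter names are illustrative only and are not taken from any scenario standard such as ASAM OpenSCENARIO.

```python
from dataclasses import dataclass, replace
from itertools import product


@dataclass(frozen=True)
class PedestrianScenario:
    """Hypothetical parameter set for a near-miss with a crossing pedestrian."""
    ego_speed_kmh: float
    weather: str
    time_of_day: str
    pedestrian_speed_ms: float


def variants(base, **axes):
    """Yield one copy of `base` for every combination of the given parameter values.

    Parameters not listed in `axes` keep their value from `base`.
    """
    names = list(axes)
    for values in product(*(axes[name] for name in names)):
        yield replace(base, **dict(zip(names, values)))


base = PedestrianScenario(ego_speed_kmh=30.0, weather="clear",
                          time_of_day="day", pedestrian_speed_ms=1.4)
sweep = list(variants(base,
                      ego_speed_kmh=[20.0, 30.0, 40.0, 50.0],
                      weather=["clear", "rain", "fog"],
                      time_of_day=["day", "dusk", "night"]))
print(len(sweep))  # 36 variants (4 speeds x 3 weather x 3 times of day)
```

Each additional axis multiplies the number of variants. This is why such sweeps quickly exceed anything that could ever be recorded on the road.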

The Development Process

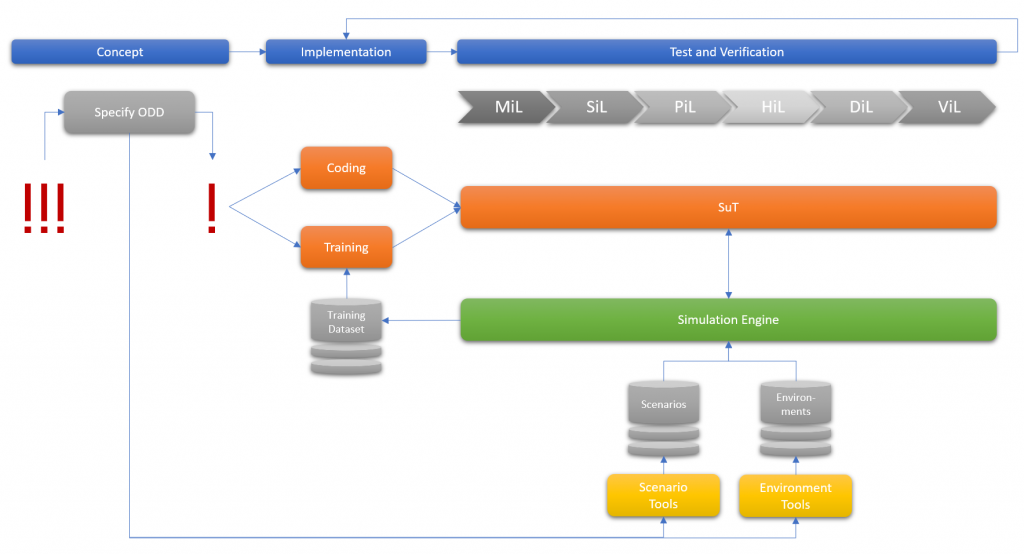

Take a look at the figure (simplifications have been made for better readability; terms are defined in the subsequent paragraphs and in our glossary):

On top you see, from left to right, the typical phases of system development. The actual execution of the process is closer to agile development than to the V-Model that is applied to “classic” engineering solutions and to the overall development of a vehicle. Note also the feedback loop that goes from test/verification back to implementation. This indicates how you apply your “lessons learned”.

The process is initiated by an “idea” of a new system or function. Before it can be implemented, its Operational Design Domain (ODD) needs to be defined. The ODD is the set of conditions under which the system may be operated (e.g. urban/rural environment, weather, road types, speed range). Defining it narrows the initial idea down to a potentially viable one and sets the scope for the accompanying specifications (not shown in the figure).
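Deriving and filtering scenarios is easier when the ODD is captured in machine-readable form. Below is a minimal sketch of a deliberately simplified ODD: a few categorical sets plus a speed range. The class, field names and example values are hypothetical and do not follow any formal ODD taxonomy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OperationalDesignDomain:
    """Hypothetical, heavily simplified ODD: categorical sets plus a speed range."""
    environments: frozenset
    road_types: frozenset
    weather: frozenset
    speed_range_kmh: tuple  # (min, max), both inclusive

    def contains(self, environment, road_type, weather, speed_kmh):
        """Return True if the given operating condition lies inside the ODD."""
        low, high = self.speed_range_kmh
        return (environment in self.environments
                and road_type in self.road_types
                and weather in self.weather
                and low <= speed_kmh <= high)


# Example: an urban low-speed function specified only for clear or rainy weather.
urban_pilot = OperationalDesignDomain(
    environments=frozenset({"urban"}),
    road_types=frozenset({"two_lane", "multi_lane"}),
    weather=frozenset({"clear", "rain"}),
    speed_range_kmh=(0.0, 50.0),
)

print(urban_pilot.contains("urban", "two_lane", "rain", 35.0))   # True
print(urban_pilot.contains("urban", "two_lane", "snow", 35.0))   # False: weather outside ODD
print(urban_pilot.contains("rural", "two_lane", "clear", 35.0))  # False: environment outside ODD
```

The same check can then be applied to every generated or recorded scenario, so that only those inside the ODD end up in the test catalogue.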

From the definition of the ODD, you may already be able to derive the environments and scenarios in which your system is expected to perform. You may find exactly these data in your real-world footage. More probably, you fire up your environment simulation engine and create virtual footage according to the requirements, using (sensor) models of your target platform’s expected configuration.

The implementation phase will include not only ML but also algorithmic parts. Whatever the mix, you will need to both write the code of your system and train its ML-based portion. For the training you will use a mix of (some) labeled real footage and considerably larger amounts of virtual footage. Together, these form your training data set.

The Roles and Benefits of XiL

As soon as early versions of your system’s model are available, you will start testing: first in a model-in-the-loop (MiL) setup, then in software-in-the-loop (SiL) arrangements. The complexity of the model, and of its implementation in modeling frameworks, may restrict the execution speed of early testing (i.e. it may run considerably slower than real-time). Sooner rather than later, however, you will have models or software versions that can run faster than real-time and on highly parallel (cloud) systems. Your environment simulation solution will have to be capable of doing the same.

Note that the challenge here lies more in the orchestration than in the actual execution. This is one reason why we also look at simulation frameworks and their cloud capabilities.

Environment simulation components that provide data to the system-under-test (SuT) in MiL and SiL configurations must also fit into CI/CD platforms. These are the software arrangements used for continuous integration/continuous deployment and automated regression testing. This leads directly to the requirement for openness, standards compliance and scalability of environment simulation solutions.

Thanks to the scalability of MiL and SiL, a large number of tests can be executed in a comparatively short time. Overall, the vast majority of your tests will run in these setups. They will help you save massively on physical prototypes, since you can start optimizing your target platform while it is still at an early or moderately advanced stage.
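In MiL/SiL, simulated time is decoupled from wall-clock time. Each run therefore finishes as fast as the hardware allows, and many runs can be dispatched in parallel. Below is a minimal sketch of such a batch. It assumes a toy scenario with point-mass kinematics: the ego vehicle approaches a stopped obstacle and brakes once time-to-collision drops below a threshold. The scenario, parameters and pass criterion are hypothetical. A thread pool is used only to show the orchestration pattern; a real setup would distribute runs across processes or cloud nodes.

```python
from concurrent.futures import ThreadPoolExecutor


def run_scenario(params):
    """Toy SiL run: ego approaches a stopped obstacle and brakes below a TTC threshold.

    Simulated time advances in fixed steps with no wall-clock pacing, so the run
    completes as fast as the CPU allows (i.e. faster than real time).
    Returns the remaining gap in metres; a negative value means a collision.
    """
    speed, gap, ttc_threshold, decel = params  # m/s, m, s, m/s^2
    dt = 0.01     # simulated seconds per step
    t_max = 30.0  # simulated time budget per run
    braking = False
    t = 0.0
    while speed > 0.0 and t < t_max:
        # Trigger braking once time-to-collision (gap / speed) falls below the threshold.
        if not braking and gap / speed < ttc_threshold:
            braking = True
        if braking:
            speed = max(0.0, speed - decel * dt)
        gap -= speed * dt
        t += dt
    return gap


# Hypothetical test matrix: 4 initial speeds x 3 TTC thresholds, 60 m gap, 6 m/s^2 braking.
scenarios = [(v, 60.0, ttc, 6.0)
             for v in (10.0, 15.0, 20.0, 25.0)
             for ttc in (1.0, 2.0, 3.0)]

with ThreadPoolExecutor(max_workers=4) as pool:
    results = list(pool.map(run_scenario, scenarios))

failures = [s for s, gap in zip(scenarios, results) if gap < 0.0]
print(f"{len(failures)} of {len(scenarios)} runs ended in a collision")
```

Because every run is a pure function of its parameters, the same batch can be re-executed unchanged in a CI pipeline as a regression test.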

While the software side is making progress, you start to look into the target hardware, i.e. the processors, sensors, electronic control units (ECUs), wiring etc. Depending on the granularity of the hardware involved in testing, we speak either of processor-in-the-loop (PiL) or hardware-in-the-loop (HiL) rigs. HiL rigs are further split into component and integration test rigs.

The hardware brings two big constraints:

- actual hardware (!) is required;
- testing must be performed in real-time, i.e. the time between the start of two simulation steps must exactly correspond to the progress in real-world time that each step is supposed to simulate.

The number of tests executed is still large (tests can be run automatically) but several orders of magnitude below the MiL and SiL numbers.
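The real-time condition can be illustrated with a fixed-step loop. The loop starts step k at wall-clock time t0 + k * dt and counts steps that do not finish in time. This is a minimal Python sketch for illustration only. Actual HiL rigs rely on real-time operating systems and dedicated hardware rather than a general-purpose interpreter. The function name and the overrun policy are assumptions.

```python
import time


def run_real_time(step, dt, n_steps, clock=time.perf_counter, sleep=time.sleep):
    """Call step(k, dt) for k = 0 .. n_steps-1, starting step k at wall-clock time t0 + k*dt.

    Start times are computed from absolute deadlines rather than by sleeping dt
    after each step, so small scheduling delays do not accumulate as drift.
    A step that is still running when the next step should start is counted as an
    overrun. Returns the number of overruns (0 means every step kept up).
    """
    overruns = 0
    t0 = clock()
    for k in range(n_steps):
        start = t0 + k * dt
        delay = start - clock()
        if delay > 0.0:
            sleep(delay)          # wait for this step's scheduled start time
        step(k, dt)               # one simulation step covering dt seconds
        if clock() > start + dt:  # finished after the next step was due
            overruns += 1
    return overruns


# A trivial step keeps up with a 10 ms cycle; a step that needs 25 ms cannot.
print(run_real_time(lambda k, dt: None, dt=0.01, n_steps=5))
print(run_real_time(lambda k, dt: time.sleep(0.025), dt=0.01, n_steps=3))
```

In a real HiL rig, an overrun usually invalidates the test run. This is why timing stability and overload management are essential properties of the environment simulation.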

Your hardware won’t be able to leave the laboratory. There won’t be fully integrated physical prototypes of the target vehicle available in early testing. It would also be far too expensive to build enough of them for the repetitive testing required, e.g., for regression. Therefore, test rigs are built that concentrate on specific test tasks. Many of these might just be electronic tests. At some point, however, you will have to expose your system to situations that involve input from the simulated world.

Data from this world will be perceived by real or simulated sensors. Your SuT (unless it is the sensor itself) will be provided with information about the surroundings of the “ego vehicle” (i.e. the vehicle bearing or being the SuT). Only with environment simulation will you be able to expose your hardware to all the situations it is expected to master, including those you dearly wish it would never encounter. Just think of situations where vulnerable road users live up to their name – or don’t do it anymore after impact. Simply put, many scenarios are either too trivial and repetitive or far too risky for an actual prototype to be exposed to. Performing these in virtual environments reduces cost and accelerates development.

In the hardware stage, interfacing to actual devices, signal injection capabilities and the correct representation of hardware properties (e.g. optics of a camera, scanning pattern of a LiDAR sensor, vehicle dynamics) become very important features of the environment simulation package – one of the reasons why we also take a close look at sensor simulation, openness and standards compliance.

Another key requirement for an environment simulation package in HiL operation is its stability. This covers timing (real-time), overload management and error-free operation. Why are we emphasizing this? Running a HiL is even more complicated than running a vehicle. Any interruption in testing due to instability of the environment simulation may require a reset of the complete test bench, with all its ECUs and other simulation components attached. Every reset harms your throughput and may delay the start of production (SOP) of your actual system.

Ultimately, before anything is released to the road, a system that interacts with a driver will be tested, among other things, for usability. This happens in driver-in-the-loop (DiL) systems (aka driving simulators) and, in safe areas such as proving grounds, in vehicle-in-the-loop (ViL) systems. These systems are, again, limited by real-time constraints and by the fact that a human is involved in operating or experiencing them. Therefore, the number of tests is lower still than for HiL systems. But DiL and ViL systems provide a safe means to expose a mockup or a real vehicle to dangerous situations (see the vulnerable road user above) in a simulated environment over and over again without risking any physical damage. Quite a cost – and life – saver.

As said, this is a very simplified view of the development process. But it makes clear one thing: environment simulation is involved from the very early stages until the very end. And we have not yet talked about validation and similar challenges.

The Call for Standards

It’s clear that the market for environment simulation is competitive and fragmented. Some providers offer fully integrated solutions, others provide excellent components for specialized applications. In many cases, users will configure and integrate the XiL systems themselves or through system integrators (SIs), combining in-house solutions with commercial components and customized features.

It’s another fact that the cost of a simulation software package itself may be marginal compared to the value of the data created with it, or to the effort put into adapting and integrating a test system. Therefore, users need to be able to do two things: continuously adapt their test systems to their needs, and keep using any assets that have been created at any point in the development process.

This is where standards come into play. Data formats, communication protocols, scripting languages, component plug-in interfaces, APIs etc. are all covered by quite a few standards around the topic of (environment) simulation. One key initiative is driven by ASAM e.V. (see also their SIM:Guide); others include the FMI standard. As a user, make sure you pick an environment simulation solution that complies with a reasonable set of standards. You will then always be able to select the best-fit solution for a specific task without compromising on what has already been achieved or what needs to be done in the future.

Let’s shed some light on two examples: vehicle dynamics and sensor models. In vehicle dynamics, you may start with a simple model that computes fast and has reasonable fidelity, so that your SuT behaves “plausibly”, well within the operating envelope. When it comes to covering the edge cases, however, you might want to find out where your vehicle will, for example, start to skid or even spin, or how the chassis (with sensors attached) will move over a rough road. Exchanging your vehicle dynamics component, even on a case-by-case basis, will be much easier if all variants comply with the most common standards. In this case, these are OpenDRIVE and OpenCRG for the road, and FMI for packaging the model and its solver.
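The value of a common interface is that the test harness does not change when the model does. The sketch below assumes a hypothetical VehicleDynamics interface, written as a typing.Protocol. It stands in for what a standard such as FMI provides in practice, but it neither uses nor imitates the FMI API. The simple model is a kinematic bicycle model. The variant keeps the same kinematics but flags when the demanded lateral acceleration exceeds the friction limit (mu * g). This is a crude proxy for where the vehicle would start to skid.

```python
import math
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class State:
    x: float = 0.0    # m
    y: float = 0.0    # m
    yaw: float = 0.0  # rad
    v: float = 0.0    # m/s


class VehicleDynamics(Protocol):
    """Hypothetical common interface that every exchangeable model implements."""
    def step(self, s: State, steer: float, accel: float, dt: float) -> State: ...


class KinematicBicycle:
    """Fast, low-fidelity model: no tyre forces, so it can never skid."""

    def __init__(self, wheelbase: float = 2.8):
        self.wheelbase = wheelbase

    def step(self, s: State, steer: float, accel: float, dt: float) -> State:
        v = max(0.0, s.v + accel * dt)
        yaw = s.yaw + v / self.wheelbase * math.tan(steer) * dt
        return State(s.x + v * math.cos(yaw) * dt, s.y + v * math.sin(yaw) * dt, yaw, v)


class FrictionCheckedBicycle(KinematicBicycle):
    """Same kinematics, but records whether the demanded lateral acceleration
    v^2 * tan(steer) / wheelbase ever exceeded mu * g (a crude skid indicator)."""

    def __init__(self, wheelbase: float = 2.8, mu: float = 0.8):
        super().__init__(wheelbase)
        self.mu = mu
        self.skid_detected = False

    def step(self, s: State, steer: float, accel: float, dt: float) -> State:
        a_lat = s.v ** 2 * abs(math.tan(steer)) / self.wheelbase
        if a_lat > self.mu * 9.81:
            self.skid_detected = True
        return super().step(s, steer, accel, dt)


def run_steady_turn(model: VehicleDynamics, speed: float) -> State:
    """Identical test harness for any model: hold a fixed steering angle for 1 s."""
    s = State(v=speed)
    for _ in range(100):
        s = model.step(s, steer=0.05, accel=0.0, dt=0.01)
    return s


for speed in (10.0, 30.0):
    model = FrictionCheckedBicycle()
    run_steady_turn(model, speed)
    print(speed, model.skid_detected)  # 10.0 False, then 30.0 True
```

A genuinely higher-fidelity model (tyre forces, load transfer) would also change the trajectory. The point here is only that swapping models leaves run_steady_turn untouched.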

For sensor models, the case is quite similar. You may start your development process with simplified (perfect) sensor models. These provide object information with little computation of actual physical properties involved. They are fast and provide a good basis for MiL and SiL simulation at scale. But once you want to find out how your SuT’s perception system will perform under realistic conditions (weather, light, environment setup), you will need to upgrade your models. Again, standard-compliant components will take you much further than niche solutions that rely on their own versions of the virtual environment. The relevant standards here are, again, OpenDRIVE, OpenCRG and FMI, but also OSI for communication and OGC CDB for terrain databases.
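A perfect sensor model can be as simple as filtering the simulator’s ground-truth object list by the sensor’s range and field of view. The sketch below assumes a single forward-facing sensor in the ego frame. The class, the function and the default values (80 m range, 60° field of view) are hypothetical. Plain dataclasses stand in for the ground-truth and detected-object messages that an OSI-based setup would exchange. A realistic model would add noise, occlusion, and weather- and light-dependent detection performance on top of this.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class GroundTruthObject:
    obj_id: int
    x: float   # m, ego frame, forward
    y: float   # m, ego frame, to the left
    kind: str


def ideal_sensor(objects, max_range=80.0, fov_deg=60.0):
    """Perfect sensor: return every ground-truth object inside range and horizontal FOV, unaltered."""
    half_fov = math.radians(fov_deg) / 2.0
    detected = []
    for obj in objects:
        distance = math.hypot(obj.x, obj.y)
        bearing = math.atan2(obj.y, obj.x)
        if distance <= max_range and abs(bearing) <= half_fov:
            detected.append(obj)
    return detected


scene = [
    GroundTruthObject(1, 20.0, 1.5, "car"),
    GroundTruthObject(2, 15.0, -12.0, "pedestrian"),  # bearing about -38.7 deg, outside +/-30 deg
    GroundTruthObject(3, 120.0, 0.0, "truck"),         # beyond 80 m
]
print([obj.obj_id for obj in ideal_sensor(scene)])  # [1]
```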

Conclusion

Environment simulation has a key role in the development of future mobility solutions. The quality of the simulation platform will have direct impact on the quality, reliability and safety of the system-under-development. Different development stages and setups call for solutions that are open, scalable, standards-compliant, easy to operate etc. Where ADAS systems may still be overridden by a driver, AD systems will have to take much better care of their passengers. This calls for ever more extensive testing of massive numbers of scenarios in order to make sure that the “unknown” can be handled in a safe manner.

Exposing ADAS and AD systems to a large number of scenarios for training and testing cannot be done by physical tests alone. The sheer number of these tests, and the risk involved in many of them, don’t allow for prototypes to be set up and “wasted”. Therefore, it is reasonable to assume that 90 percent or more of all tests, from early development to start of production, will involve simulated environment components.

The actual investment in an environment simulation solution might be small compared to the overall development costs of a mobility system. But the value you can create (or destroy) with your choice of a specific solution is higher than ever before. Our aim is to assist you in your selection process by providing the information we and our users have compiled in this repository.