Quantifying Simulation Quality – GSVF 2021

At GSVF 2021 we had a great discussion at a round table moderated by Alexander Braun, Hochschule Düsseldorf University of Applied Sciences, and Marius Dupuis, SimCert. Its subject: “Quantifying Simulation Quality”.

The Frame for Discussion

The motivation for convening the round table was that simulation quality per se is hard to grasp, and that expected and experienced quality are mostly shaped by a user’s personal background and exposure to simulation.

Our goal was to identify

- relevant

- universally accepted

- broadly applicable

- quantifiable

quality criteria and certification processes.

The points for discussion at the round table were

- Clarification of Terms

  - types and components of simulation

  - “quality” of simulation

  - interested parties

- Quantifiable Properties

  - simulation vs. abstract criteria

  - simulation vs. reality

- Quality Assessment

  - how good is good enough?

- Certification

With this frame set, we came up with a good collection of thoughts and inspiration for further action:

The Results

Models and Tools

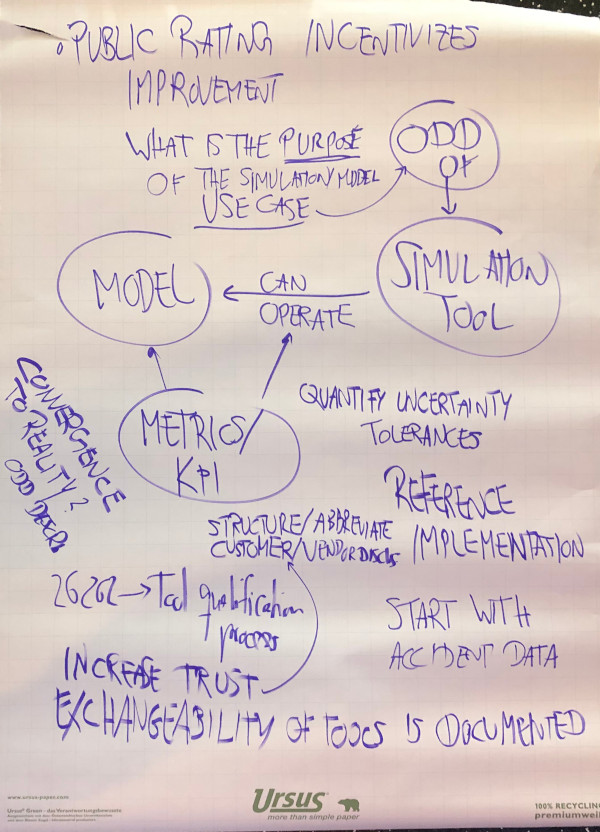

First, we have to distinguish between models and simulation tools. Models reflect the (physical) properties of the subject they aim to represent, and simulation tools provide the means to operate models. For both models and tools, operational design domains (ODDs) have to be defined, indicating under which circumstances or for which tasks they may be used.

A use case defines the purpose for which a simulation tool or model shall be used. It is the primary key to matching a challenge with a potential solution. The requirements coming from the use case have to match the criteria of the respective ODDs so that a valid simulation solution may be identified.

Therefore, we have the first results in our search for quantification criteria:

- identify the models which a simulation tool is able to run

- identify the ODDs in which a tool may operate

- identify the use cases for which the ODDs are valid
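
To make the matching of use cases against ODDs a little more concrete, here is a minimal Python sketch. It is only an illustration under stated assumptions, not something that was presented at the round table: it assumes that an ODD can be written down as numeric parameter ranges, and all names in it (`Range`, `Tool`, `UseCase`, `shortlist`, the parameter names and the example tools) are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Range:
    """Closed numeric interval [low, high] for one ODD parameter (assumed representation)."""
    low: float
    high: float

    def covers(self, other: Range) -> bool:
        # True if `other` lies completely inside this interval.
        return self.low <= other.low and other.high <= self.high


@dataclass
class Tool:
    name: str
    models: set[str]        # models the tool is able to run
    odd: dict[str, Range]   # ODD: parameter name -> supported range


@dataclass
class UseCase:
    name: str
    required_models: set[str]
    requirements: dict[str, Range]  # parameter name -> range the use case needs


def is_valid_solution(tool: Tool, use_case: UseCase) -> bool:
    """A tool qualifies if it runs all required models and its ODD covers every requirement."""
    if not use_case.required_models <= tool.models:
        return False
    return all(
        param in tool.odd and tool.odd[param].covers(needed)
        for param, needed in use_case.requirements.items()
    )


def shortlist(tools: list[Tool], use_case: UseCase) -> list[str]:
    """Names of all tools that are valid for the given use case."""
    return [tool.name for tool in tools if is_valid_solution(tool, use_case)]


# Hypothetical example data, purely for illustration.
tools = [
    Tool("tool_a", {"camera", "vehicle_dynamics"},
         {"speed_kmh": Range(0, 130), "rain_mm_h": Range(0, 10)}),
    Tool("tool_b", {"camera"}, {"speed_kmh": Range(0, 60)}),
]
highway = UseCase("highway_lane_keeping", {"camera", "vehicle_dynamics"},
                  {"speed_kmh": Range(0, 60), "rain_mm_h": Range(0, 5)})

print(shortlist(tools, highway))
```

In this sketch, `tool_b` drops out because it neither runs the vehicle dynamics model nor declares a rain range in its ODD. Real ODD descriptions will be richer than numeric intervals, so the `covers` check is the part that would need the most work.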

As a next step, detailed metrics for these assessments have to be worked out. One path that was already identified at the round table is the development and provision of reference implementations for specific components. This way, actual solutions may be compared to an open and commonly accepted implementation that addresses key quantification criteria.

A first specific proposal was made to use accident data in order to design reference implementations of models and make sure they have accessible interfaces so that tools may easily adapt to them.
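
As a rough idea of what comparing an actual solution to a reference implementation might look like, the following Python sketch computes the root-mean-square error between two sampled signals and checks it against a tolerance. The choice of RMSE, the tolerance value and the sample data are assumptions made for illustration only; the round table did not settle on a metric.

```python
import math


def rmse(candidate: list[float], reference: list[float]) -> float:
    """Root-mean-square error between two equally sampled signals."""
    if len(candidate) != len(reference):
        raise ValueError("signals must have the same number of samples")
    if not reference:
        raise ValueError("signals must not be empty")
    squared_errors = ((c - r) ** 2 for c, r in zip(candidate, reference))
    return math.sqrt(sum(squared_errors) / len(reference))


def meets_tolerance(candidate: list[float], reference: list[float],
                    tolerance: float) -> bool:
    """True if the candidate deviates from the reference by at most `tolerance` (RMSE)."""
    return rmse(candidate, reference) <= tolerance


# Hypothetical outputs of a reference model and of a tool under assessment.
reference = [0.0, 1.0, 2.0, 3.0]
candidate = [0.0, 1.1, 1.9, 3.0]

print(f"RMSE: {rmse(candidate, reference):.4f}")
print("within tolerance:", meets_tolerance(candidate, reference, tolerance=0.1))
```

A single scalar such as RMSE hides where and when the deviation occurs, so a real assessment would likely combine several metrics per use case.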

Business Relationship

Next on the agenda of the round table were the stakeholders:

The business relationship between users and vendors is at the center of the discussion. With commonly accepted KPIs for the quality of simulation tools, it will be possible to structure and shorten discussions between customers and vendors. Instead of having to start from the basics in these discussions, the fundamental layer of information may be provided by ratings which are performed along impartial guidelines of quality quantification.

Anything a vendor provides in excess of this basis may be marketed in the same way as it is done today. Vendors retain the complete spectrum of means to highlight their unique selling points (USPs), but by fulfilling their initial qualification along the basic criteria, they may concentrate their time and efforts on the crucial parts instead of the basics.

For the users, more knowledge about potential combinations of models and tools will aid in identifying the right subset of suppliers for a given use case and in starting discussions with them instead of first having to scan the whole market. Also, users may plan ahead for exchanging tools and models as their use cases evolve.

Overall, this method is intended to increase trust in the business relationship – on both sides.

Going Public

There shall be trusted agencies or institutions which perform the quality assessment process across the market’s spectrum of simulation tools. Ultimately, authorities may set the (legal) frame for criteria that have to be fulfilled by a tool in order to be accepted for a given use case (e.g., simulation of ALKS – automated lane keeping system – scenarios).

The landscape that emerged around ISO 26262 may provide a template for defining and implementing a tool qualification process.

Results of assessments shall be made public, not only to facilitate the matching of vendors and users but also to give vendors an immediate incentive to continuously improve their offerings.

Evolution

As with the models and tools themselves, the quantification of simulation quality is expected to evolve over time. Any new features that emerge across the spectrum of tools – or any requirements specified by authorities – need to be quantified, and minimum qualification levels need to be defined. If the bar is raised constantly, quality will have no choice but to improve continuously, too.

Next Steps

After concluding the 45-minute discussion in Graz, the participants agreed that it would be a good idea to find a platform for continuing the exchange of ideas. For now, this blog shall provide the very first step. Feel free to comment on its content and keep the discussion alive.

We are clearly aiming to revisit this subject at the next GSVF (August 31 – September 1, 2022) and we would be more than happy to present the community with quantifiable progress on the discussion we started this year. Your contribution in helping us achieve this goal is highly appreciated!

How to Contribute

As a first step, we want to keep the original discussion alive and grow it into a structured approach to the subject of simulation quality. You may contribute by publicly commenting on this post (see below) and/or by sending us an email at qsq@simcert.org.